By Zoe Corbyn, Technology Reporter

Getty Images

Modern computing’s appetite for electricity is increasing at an alarming rate.

By 2026, electricity consumption by data centres, artificial intelligence (AI) and cryptocurrency could be as much as double 2022 levels, according to a recent report from the International Energy Agency (IEA).

It estimates that in 2026 electricity consumption by those three sectors could be roughly equivalent to Japan’s annual electricity consumption.

Companies like Nvidia – whose computer chips underpin most AI applications today – are working on developing more energy-efficient hardware.

But could an alternative path be to build computers with a fundamentally different type of architecture, one that is more energy efficient?

Some firms certainly think so, and are drawing on the structure and function of an organ which uses a fraction of the power of a conventional computer to perform more operations faster: the brain.

In neuromorphic computing, electronic devices imitate neurons and synapses, and are interconnected in a way that resembles the electrical network of the brain.
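To make the idea of an electronic neuron more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook model widely used in neuromorphic research. It is an illustration only, assuming simple discrete time steps; it is not a description of how any chip in this article works, and the function name, synapse weights, threshold and leak values are all hypothetical.

```python
from typing import List


def lif_neuron(input_spikes: List[List[int]], weights: List[float],
               threshold: float = 1.0, leak: float = 0.9) -> List[int]:
    """Simulate one hypothetical leaky integrate-and-fire neuron.

    input_spikes: for each time step, a 0/1 flag per incoming synapse.
    weights: the strength of each incoming synapse (illustrative values).
    Returns a 0/1 output spike flag for each time step.
    """
    potential = 0.0
    output = []
    for step in input_spikes:
        # Each synapse adds its weight only if its input neuron spiked.
        synaptic_input = sum(w * s for w, s in zip(weights, step))
        # The stored charge leaks a little, then integrates the new input.
        potential = potential * leak + synaptic_input
        if potential >= threshold:
            output.append(1)   # fire a spike...
            potential = 0.0    # ...and reset the stored charge
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    spikes_in = [[1, 0], [0, 1], [1, 1], [0, 0], [0, 0], [1, 1]]
    print(lif_neuron(spikes_in, weights=[0.5, 0.4]))  # [0, 0, 1, 0, 0, 0]
```

In this toy run the neuron fires only at the third step, once input has built up faster than it leaks away; the single burst at the final step is not enough on its own to cross the threshold.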

It isn’t new – researchers have been working on the technique since the 1980s.

But the energy requirements of the AI revolution are increasing the pressure to get the nascent technology into the real world.

Current systems and platforms exist primarily as research tools, but proponents say they could provide huge gains in energy efficiency.

Those with commercial ambitions include hardware giants such as Intel and IBM.

A handful of small companies are also on the scene. “The opportunity is there waiting for the company that can figure this out,” says Dan Hutcheson, an analyst at TechInsights. “[And] the opportunity is such that it could be an Nvidia killer”.

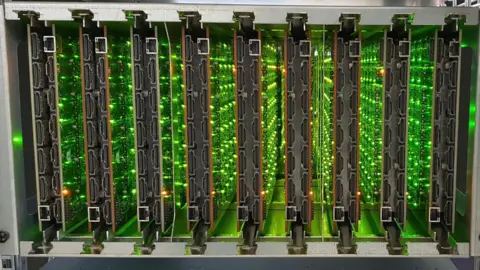

SpiNNcloud Systems

In May SpiNNcloud Systems, a spinout of the Dresden University of Technology, announced it will begin selling neuromorphic supercomputers for the first time, and is taking pre-orders.

“We have reached the commercialisation of neuromorphic supercomputers in front of other companies,” says Hector Gonzalez, its co-chief executive.

It is a significant development, says Tony Kenyon, a professor of nanoelectronic and nanophotonic materials at University College London who works in the field.

“While there still isn’t a killer app… there are lots of areas where neuromorphic computing will provide significant gains in energy efficiency and performance, and I’m sure we’ll start to see wide adoption of the technology as it matures,” he says.

Neuromorphic computing covers a range of approaches – from simply a more brain-inspired approach, to a near-total simulation of the human brain (which we are really nowhere near).

But there are some basic design properties that set it apart from conventional computing.

First, unlike conventional computers, neuromorphic computers don’t have separate memory and processing units. Instead, those tasks are performed together on one chip in a single location.

Removing that need to transfer data between the two reduces the energy used and speeds up processing time, notes Prof Kenyon.

Many neuromorphic systems also take an event-driven approach to computing.

In contrast to conventional computing where every part of the system is always on and available to communicate with any other part all the time, activation in neuromorphic computing can be sparser.

The imitation neurons and synapses only activate when they have something to communicate, much as many neurons and synapses in our brains only spring into action when there is a reason to.

Doing work only when there is something to process also saves power.
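The saving from event-driven computing can be illustrated by counting work. The sketch below is hypothetical and much simplified (no leak, fixed synapse weight, invented function names): a clock-driven simulator updates every neuron on every tick, while the event-driven loop, using a priority queue of spike deliveries, only touches a neuron when a spike actually reaches it.

```python
import heapq


def clock_driven_update_count(num_neurons: int, num_steps: int) -> int:
    """A clock-driven simulator touches every neuron on every tick."""
    return num_neurons * num_steps


def event_driven_run(initial_spikes, fanout, weight=1.0, threshold=1.0,
                     max_time=100):
    """Process spikes only when they arrive (no leak, for simplicity).

    initial_spikes: list of (time_step, neuron_id) spike deliveries.
    fanout: dict mapping neuron_id -> list of downstream neuron_ids.
    Returns (output_spikes, update_count).
    """
    queue = list(initial_spikes)
    heapq.heapify(queue)  # earliest delivery first
    potentials = {}
    output_spikes = []
    update_count = 0
    while queue:
        t, neuron = heapq.heappop(queue)
        if t > max_time:  # guard against runaway activity in cyclic networks
            break
        update_count += 1  # work happens only because an event arrived
        potentials[neuron] = potentials.get(neuron, 0.0) + weight
        if potentials[neuron] >= threshold:
            output_spikes.append((t, neuron))
            potentials[neuron] = 0.0
            # Deliver the new spike to downstream neurons one step later.
            for target in fanout.get(neuron, []):
                heapq.heappush(queue, (t + 1, target))
    return output_spikes, update_count


if __name__ == "__main__":
    # A tiny active chain inside a notional 1,000-neuron network.
    fanout = {0: [1, 2], 1: [3]}
    spikes, updates = event_driven_run([(0, 0)], fanout)
    print(spikes, updates)  # [(0, 0), (1, 1), (1, 2), (2, 3)] 4
    print(clock_driven_update_count(1000, 3))  # 3000
```

Over the same three time steps, the event-driven loop performs four neuron updates while a clock-driven loop over 1,000 neurons would perform 3,000, because most of the network had nothing to do.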

And while modern computers are digital – using 1s or 0s to represent data – neuromorphic computing can be analogue.

Historically important, analogue computing relies on continuous signals and can be useful where data coming from the outside world needs to be analysed.

However, largely because digital designs are easier to work with, most commercially oriented neuromorphic efforts are digital.

Commercial applications envisaged fall into two main categories.

One, which is where SpiNNcloud is focused, is in providing a more energy efficient and higher performance platform for AI applications – including image and video analysis, speech recognition and the large-language models that power chatbots such as ChatGPT.

Another is in “edge computing” applications – where data is processed in real time on connected devices rather than in the cloud, and where those devices operate under tight power constraints. Autonomous vehicles, robots, mobile phones and wearable technology could all benefit.

Technical challenges, however, remain. Developing the software the chips need in order to run has long been regarded as a main stumbling block to the advance of neuromorphic computing.

While having the hardware is one thing, it must be programmed to work, and that can require developing from scratch a totally different style of programming to that used by conventional computers.

“The potential for these devices is huge… the problem is how do you make them work,” sums up Mr Hutcheson, who predicts it will be at least a decade, if not two, before the benefits of neuromorphic computing are really felt.

There are also issues with cost. Whether they use silicon, as the commercially oriented efforts do, or other materials, creating radically new chips is expensive, notes Prof Kenyon.

Intel

Intel’s current prototype neuromorphic chip is called Loihi 2.

In April, the company announced it had brought together 1,152 of them to create Hala Point, a large-scale neuromorphic research system comprising more than 1.15 billion artificial neurons and 128 billion artificial synapses.

With a neuron capacity roughly equivalent to an owl’s brain, it is, Intel claims, the world’s largest such system to date.
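A quick back-of-the-envelope division puts Intel’s totals in per-chip terms. It assumes, which the figures themselves do not state, that neurons and synapses are spread evenly across the 1,152 chips, and since the neuron total is quoted as “more than” 1.15 billion, the results are approximate.

```python
# Totals quoted for Hala Point; an even spread across chips is an assumption.
chips = 1_152
neurons = 1.15e9
synapses = 128e9

neurons_per_chip = neurons / chips
synapses_per_chip = synapses / chips
synapses_per_neuron = synapses / neurons

print(f"{neurons_per_chip:,.0f} neurons per chip")         # 998,264
print(f"{synapses_per_chip:,.0f} synapses per chip")       # 111,111,111
print(f"{synapses_per_neuron:.0f} synapses per neuron")    # 111
```

That works out at roughly a million neurons and about 111 million synapses per Loihi 2 chip, or around 111 synapses per neuron on average.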

At the moment it is still a research project for Intel.

“[But Hala Point] is showing that there’s some real viability here for applications to use AI,” says Mike Davies, director of Intel’s neuromorphic computing lab.

About the size of a microwave oven, Hala Point is “commercially relevant” and “rapid progress” is being made on the software side, he says.

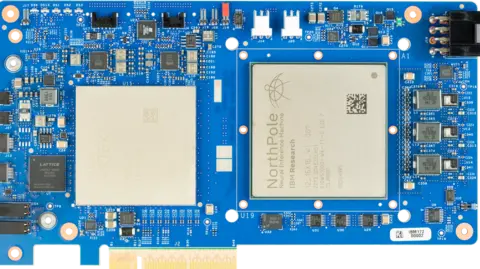

IBM calls its latest brain-inspired prototype chip NorthPole.

Unveiled last year, it is an evolution of its previous TrueNorth prototype chip. Tests show it is more energy efficient, more space efficient and faster than any chip currently on the market, says Dharmendra Modha, the company’s chief scientist of brain-inspired computing. He adds that his group is now working to demonstrate that the chips can be linked together into a larger system.

“Path to market will be a story to come,” he says. One of the big innovations with NorthPole, notes Dr Modha, is that it has been co-designed with the software so the full capabilities of the architecture can be exploited from the get-go.

Other smaller neuromorphic companies include BrainChip, SynSense and Innatera.

IBM

SpiNNcloud’s supercomputer commercialises neuromorphic computing developed by researchers at both TU Dresden and the University of Manchester, under the umbrella of the EU’s Human Brain Project.

Those efforts have resulted in two neuromorphic supercomputers built for research. One is the SpiNNaker1 machine at the University of Manchester, which has over one billion neurons and has been operational since 2018.

The other, a second-generation SpiNNaker2 machine at TU Dresden that is still being configured, has the capacity to emulate at least five billion neurons. The commercially available systems offered by SpiNNcloud can reach an even higher level of at least 10 billion neurons, says Mr Gonzalez.

The future will be one of different types of computing platforms – conventional, neuromorphic and quantum, which is another novel type of computing also on the horizon – all working together, says Prof Kenyon.